Speedy production dates: an alternative to Hartmann's table?

eugeneandresson

·Hi @WurstEver (and friends)

Tonight I cobbled together a few lines of Python (the quick bit). Last night I had the fun of going through the same process for scraping data (the not so quick bit), but I am sure you have got more / better data than me via PM and @kov (who removed his info 😀 I don't blame him at all).

That said, I used the extracts from the last two kind forum members who posted here as a first test for my cobbling.

Here is what I get (polynomial order in parentheses).

@lando has 265518XX with Extract for October 1968 (1968 / 10)

I get :

(3) 1968 / 9

(4) 1968 / 8

(5) 1968 / 8

and

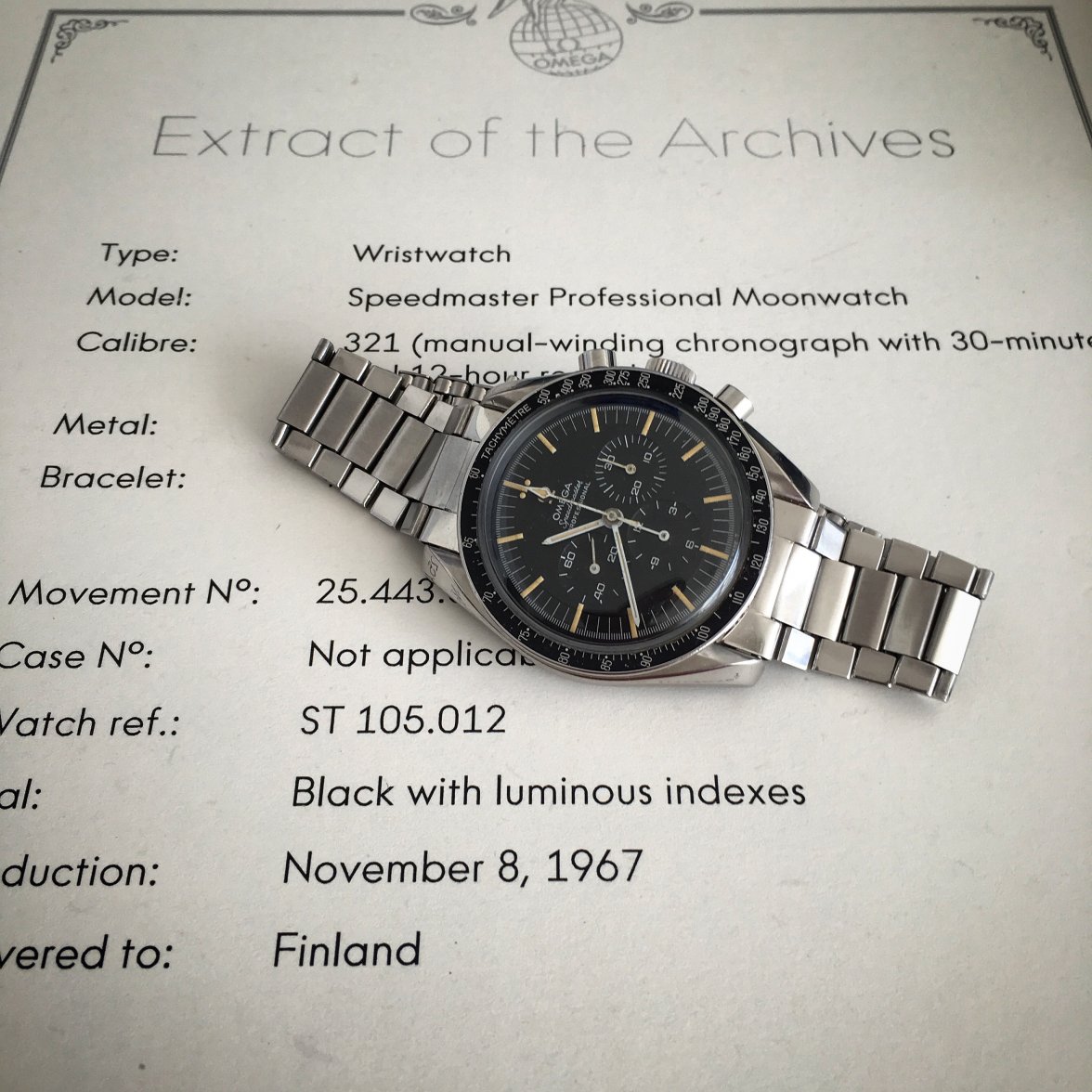

@XXoF has 25443XXX with Extract for November 1967 (1967 / 11)

I get :

(3) 1967 / 12

(4) 1967 / 11

(5) 1967 / 12

Not too bad, but still off. I do have some samples close to these, so I am taking this with a few grains of salt. The polynomial order was varied just for interest's sake. I have only just begun thinking about this... still got some experimentation to do.
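A minimal sketch of this kind of order-by-order comparison, for anyone who wants to try it. It is not the script used for the numbers above: the data are only the serial/extract-date pairs quoted elsewhere in this thread (masked digits zero-filled), `month_index` and `predict` are names made up for the example, and `numpy.polynomial.Polynomial.fit` is used because it rescales the large serial values internally.

```python
import numpy as np
from numpy.polynomial import Polynomial

def month_index(year, month):
    """Running month count, so that differences come out in months."""
    return year * 12 + (month - 1)

# (serial, year, month) pairs quoted in this thread; masked digits zero-filled.
KNOWN = [
    (19832000, 1963, 6), (22824000, 1965, 12), (24530000, 1966, 12),
    (25443000, 1967, 11), (26077000, 1968, 6), (26079000, 1968, 7),
    (29112000, 1970, 3), (29636000, 1970, 6), (30598000, 1970, 12),
    (31622000, 1971, 8), (32193764, 1971, 10), (32830000, 1973, 12),
    (39925152, 1977, 9),
]

def predict(serial, order):
    """Least-squares polynomial of the given order, evaluated at `serial`."""
    x = np.array([s for s, _, _ in KNOWN], dtype=float)
    y = np.array([month_index(yr, mo) for _, yr, mo in KNOWN], dtype=float)
    fit = Polynomial.fit(x, y, order)  # rescales x internally for stability
    months = int(round(float(fit(serial))))
    return months // 12, months % 12 + 1  # (year, month)

# 265518XX (extract: 1968 / 10) is left out of KNOWN and used as the test point.
predictions = {order: predict(26551800, order) for order in (3, 4, 5)}
for order, (year, month) in predictions.items():
    print(f"({order}) {year} / {month}")
```

With a different data set the predictions will not match the ones posted above; the point is only the mechanics of comparing orders on a held-out serial.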

Some observations:

- I observe a couple of 'anomalies' in the complete serial number data (i.e. some increasing serial numbers have a decrease in date -> you have seen this too).

- I observe quite a lot more of these 'anomalies' where I have incomplete serial numbers (mostly missing the last 3 or 4 digits).

- I can't say for sure, but I feel that this might play some role in overall accuracy. I know the last 3 or 4 digits of 10 000 000 should statistically not make that much of a difference, but the techniques being used in the background most likely use derivatives in some way or other, and any error here could have a projected effect accordingly... I admit I am OCD 😀 (Hint: would love it if the kind folks could give full SNs)

Anyways...

What does this all mean?

- There now exists a different implementation of the nice applied math idea you had to begin this thread with (albeit with a different data set).

- You are not alone 😀

I would like to put this online under an open source license, including the data. Unless anybody strongly objects...

Best regards,

Eugene

PS: Another thing worth thinking about as a hybrid solution would be to pick a technique based on how close an unknown sample is to a known sample. I.e. if it is within 'Q' serial number samples of a nearest neighbor, use polynomial approach, otherwise use simple linear interpolation. How to choose Q? And how to get enough new data in to test this?
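The selection rule in the PS could be sketched as below. This is only an illustration: `choose_method` is a made-up name, 'Q' is read here as a distance in serial-number units (one possible interpretation), and the value 500 000 is an arbitrary placeholder, since how to choose Q is exactly the open question.

```python
import bisect

def choose_method(serial, known_serials, q):
    """Return 'polynomial' if a known serial lies within q of `serial`, else 'linear'.

    Assumes `known_serials` is not empty.
    """
    known = sorted(known_serials)
    i = bisect.bisect_left(known, serial)
    neighbours = known[max(i - 1, 0):i + 1]  # closest known serial on each side
    nearest_gap = min(abs(serial - k) for k in neighbours)
    return "polynomial" if nearest_gap <= q else "linear"

# Known serials taken from this thread; Q is a placeholder value.
known = [26077000, 26079000, 32830000, 39925152]
print(choose_method(26551800, known, q=500_000))
print(choose_method(36000000, known, q=500_000))
```

One way to tune Q would be to hold out known extracts one at a time and keep the value that gives the smallest total error, which again needs more data.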

WurstEver

·Can you put up a graph with all the new points shown?

Yep, given all the new observations that have come in since the last major update, I think it's time to consolidate. I'll do an update of the data set and a full analysis of the accuracy of the different methods against everything we know soon.

Not too bad, but still off. I do have some samples close to these, so I am taking this with a few grains of salt. The polynomial order was varied just for interest's sake. I have only just begun thinking about this... still got some experimentation to do

Love your work, Eugene! If I'm reading this correctly, your outcomes indicate that (1) the polynomial models performed quite well, and (2) adding terms to the model didn't yield appreciably better performance? Very interesting.

PS: Another thing worth thinking about as a hybrid solution would be to pick a technique based on how close an unknown sample is to a known sample. I.e. if it is within 'Q' serial number samples of a nearest neighbor, use polynomial approach, otherwise use simple linear interpolation. How to choose Q? And how to get enough new data

Yep, I agree this is the question we need to tackle next. On the question of how to get new data, @JMH76 had a couple of good ideas above. Great stuff!

WurstEver

·- I observe quite a lot more of these 'anomalies' where I have incomplete serial numbers (mostly 3/4 digits).

- I can't say for sure, but I feel that this might play some role in overall accuracy. I know the last 3 or 4 digits of 10 000 000 should statistically not make that much of a difference, but the techniques being used in the background most likely use derivatives in some way or other, and any error here could have a projected effect accordingly...

Oh, I think you're probably right @eugeneandresson. Of the cases that I have been working with so far, only about 45% have full serial numbers. The rest are typically only the first 4-5 digits. As I explained in the OP, my approach has been to simply replace unknown digits with zeros. I haven't given a lot of thought (yet) to what impact that might have - apart from reducing the accuracy of the predictions - or whether there would be a better approach. But if anyone has any thoughts I'd be keen to hear 'em! As we converge on a stable approach, it might be worth investigating little tweaks like this in order to squeeze out every last little bit of accuracy from the procedure.
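To put a number on the zero-fill question, here is a small sketch that turns a masked serial into the range of serials it could stand for. `serial_bounds` is a made-up helper, and using the midpoint instead of the zero-filled value is only a suggestion for something to test, not a method anyone in this thread has reported using.

```python
def serial_bounds(masked):
    """Smallest and largest serial consistent with a masked string like '265518XX'."""
    digits = masked.upper()
    low = int(digits.replace("X", "0"))   # the zero-fill described above
    high = int(digits.replace("X", "9"))
    return low, high

low, high = serial_bounds("265518XX")
midpoint = (low + high) // 2
print(low, high, midpoint)
```

Zero-filling always picks the lowest serial in the range, so any error it introduces is one-sided. The midpoint halves the worst-case error, although whether that shows up as better date predictions is untested here.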

eugeneandresson

·@WurstEver I implemented another method of doing this (using 'standard score' (https://en.wikipedia.org/wiki/Standard_score) and looking up the data in transformed coordinate space), and now I get:

265518XX : 1968 / 10.0

25443XXX : 1967 / 11.0

So 2/2 correct 😀 With incomplete serials.

Would appreciate some more serial numbers (i.e. those which gave you a problem a while ago) to see how this fares...PM them to me (EDIT : please 😀 )? I can send you the results...

WurstEver

·Interesting, @eugeneandresson! I'd not thought of transforming the data before doing the estimation. Often it's useful to transform raw data to conform more closely to a normal distribution prior to running certain inferential tests, so maybe there's similar benefits here? I'm honestly not sure. So you're transforming the known serials and dates (?) to z-scores (using the mean and stdev of the sample you've collected?), presumably also expressing the search serial as a z-score (?) and then using those to do what kind of lookup? Different from the methods we've been applying to the raw data? Keen to hear the details when you get a chance. Anyhoo, way to think outside the box! 👍

WurstEver

·Just had a thought, @eugeneandresson: What does the transformation do to the shape of the scatterplot? Does the plot of z(serial) by z(month number) end up looking roughly linear across the whole range? This could be the 'active ingredient' in any observed performance improvement... Definitely worth looking into. Nice work!
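One part of this can be checked directly. A standard score only shifts and rescales each axis, so it cannot bend the scatterplot, and the correlation between serial and month number is the same before and after. A minimal sketch, using a handful of serial/date pairs quoted in this thread (`zscore` is a helper written for the example and uses the population standard deviation):

```python
import numpy as np

def zscore(values):
    """Standard score: subtract the mean, divide by the standard deviation."""
    values = np.asarray(values, dtype=float)
    return (values - values.mean()) / values.std()

# Serials and extract dates (as year * 12 + month - 1) quoted in this thread.
serials = np.array([19832000, 22824000, 26077000, 29636000, 32830000, 39925152])
months = np.array([1963 * 12 + 5, 1965 * 12 + 11, 1968 * 12 + 5,
                   1970 * 12 + 5, 1973 * 12 + 11, 1977 * 12 + 8])

r_raw = np.corrcoef(serials, months)[0, 1]
r_z = np.corrcoef(zscore(serials), zscore(months))[0, 1]
print(r_raw, r_z)  # the two values agree up to rounding
```

So if the transformed lookup performs differently, the difference has to come from the lookup step (for example how distances on the two axes are combined), not from a change in the shape of the data.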

WurstEver

·MINOR UPDATE:

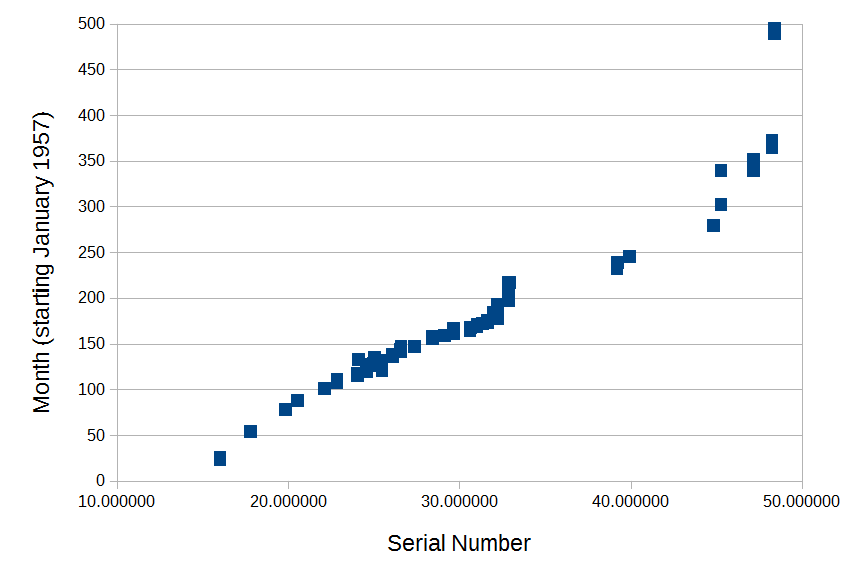

I've finished entering the data that has been posted in this thread and sent via PM over the last couple of weeks. Thanks so very much to everyone who has contributed! Below is a scatterplot of the entire dataset. There are now 105 pairs of serial numbers and extract production dates. For most of these, I have a photograph of the extract.

I'm now in the middle of doing an updated analysis of the prediction methods that we've been discussing in this thread; and in particular, looking at how to use the methods together to get the most accurate prediction of production date from serial number possible. Suggestions more than welcome on this matter (indeed, on anything related to this analysis). The data are raising some questions though - for example, where are all the Speedy Pros in the serial number ranges 33-39 million and 40-44 million?? If there is anyone out there with watches in either range, please do get in touch. Perhaps those movement numbers simply weren't used for Speedy Pros ... Or perhaps people are less likely to get extracts for watches from around that period and this is just sampling bias ...

While the outcomes of these analyses so far aren't 100% perfect (and indeed, probably never will be) I'm becoming reasonably confident that in most cases we will be able to do better than the estimates that have been previously available without an extract. And it's all thanks to a fantastic OF community effort! 👍

eugeneandresson

·Been busy, weather has been great, and @WurstEver's questions/replies didn't trickle into my email for some reason, hence my silence 😀 Congrats @WurstEver getting so many data points 👍

---------------------------------------

To try to answer your questions:

'And then using what to do the lookup?' -> A very funky inverse distance weighting interpolation algorithm... which was ported from Fortran to C++ a few years ago, but I didn't feel like porting it to Python (or moving what I have done for this from Python into C++). But I did port a simpler version. SciPy has several sub-libraries for all sorts of elegant and fancy interpolations, and I also tried many of those.

'What does the transformation do to the shape of the scatterplot? Does the plot of z(serial) by z(month number) end up looking roughly linear across the whole range?' -> Unfortunately this data generally does not have too much of an irregular shape, so even though this technique is a very good one, it's not a good fit for this whole data set. There is also not really enough random data (but there are regions of the data that start to look like this...). The trick is to use a local neighborhood (and naturally to get more data).

---------------------------------------

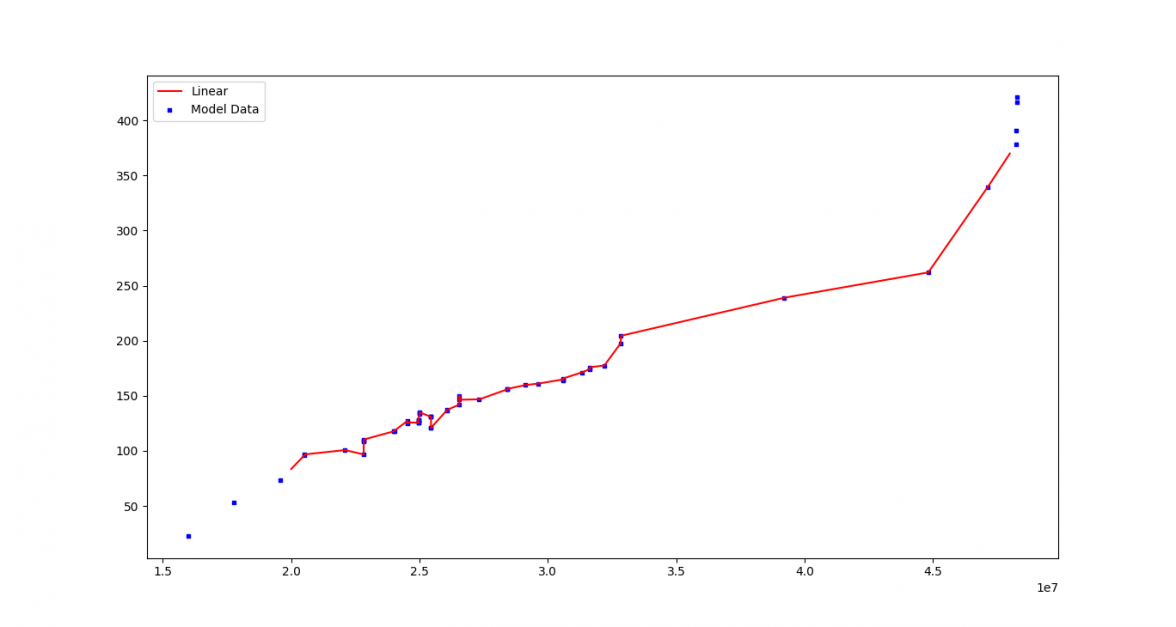

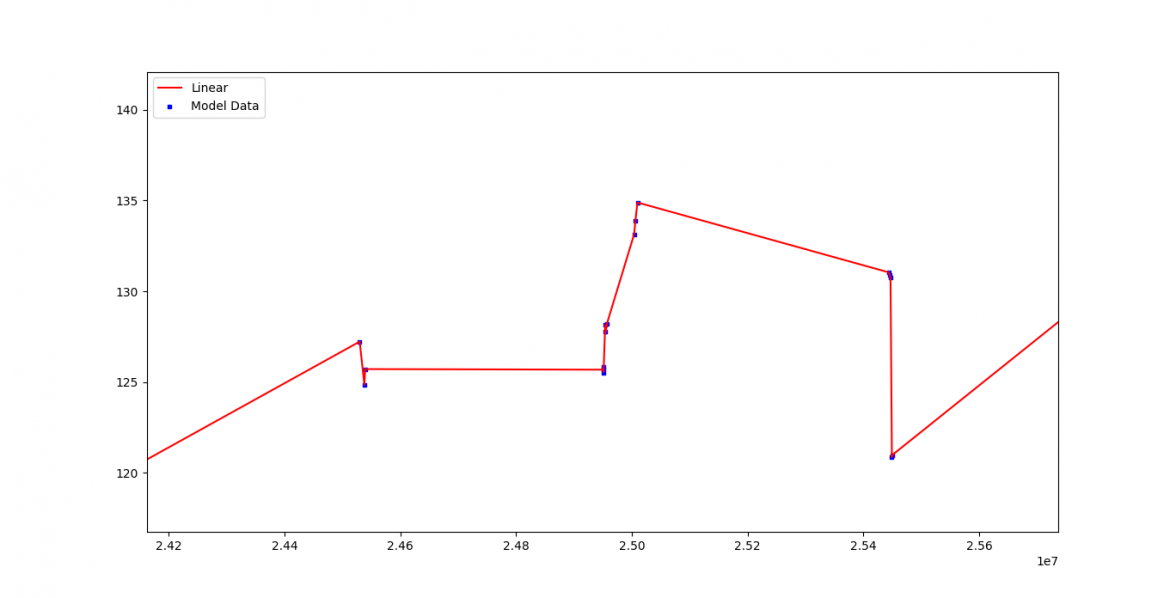

So what did I get up to? Well, here goes.

Linear interpolation seems to work well for sparse data (it is the best guess if there are only 2 points spanning 1 or 2 years for example).
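For reference, a minimal sketch of the two-point case using `numpy.interp`. The two known points are extracts quoted in this thread; `interpolate` is a name made up for the example, and dates are handled as a running month count (year * 12 + month - 1), which is an assumption about representation rather than a description of the actual code.

```python
import numpy as np

# Two known points quoted in this thread: 26079000 -> 1968 / 7, 29112000 -> 1970 / 3.
known_serials = np.array([26079000.0, 29112000.0])
known_months = np.array([1968 * 12 + 6, 1970 * 12 + 2], dtype=float)

def interpolate(serial):
    """Straight-line estimate between the bracketing known serials."""
    months = float(np.interp(serial, known_serials, known_months))
    year, month0 = divmod(int(round(months)), 12)
    return year, month0 + 1

print(interpolate(26551800))
```

Note that `numpy.interp` clamps to the end values outside the known range, so this sketch does not extrapolate beyond the first or last known serial.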

In the regions where there is a lot of data, or with 'contradictions' per se (i.e. scattered points where random-movement-escaped-the-bin-in-non-sequential-order took place, or even better a lot of them), the z-transformation / inverse distance weighted neighborhood lookup IMHO is a better approach. At present, I seem to get the same results whether I do the Z-transform or not, but I don't have that many samples, so I leave it in (for further tweaking).
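A much simplified 1D sketch of inverse distance weighting, with and without the standard-score step. This is not the ported algorithm described above: `idw` and `idw_zscored` are names made up for the example, the power of 2 is an arbitrary choice, and the sample points are extracts quoted in this thread.

```python
import numpy as np

def idw(query, xs, ys, power=2.0):
    """1D inverse distance weighted average of ys at position `query`."""
    xs, ys = np.asarray(xs, dtype=float), np.asarray(ys, dtype=float)
    dist = np.abs(xs - query)
    if np.any(dist == 0):                 # exact hit: return that sample's value
        return float(ys[dist == 0].mean())
    weights = 1.0 / dist ** power
    return float(np.sum(weights * ys) / np.sum(weights))

def idw_zscored(query, xs, ys, power=2.0):
    """Same lookup carried out in standard-score coordinates."""
    xs, ys = np.asarray(xs, dtype=float), np.asarray(ys, dtype=float)
    zx, zy = (xs - xs.mean()) / xs.std(), (ys - ys.mean()) / ys.std()
    zq = (query - xs.mean()) / xs.std()
    return idw(zq, zx, zy, power) * ys.std() + ys.mean()

serials = [26077000, 26079000, 29112000, 29636000]
months = [1968 * 12 + 5, 1968 * 12 + 6, 1970 * 12 + 2, 1970 * 12 + 5]
print(idw(26551800, serials, months), idw_zscored(26551800, serials, months))
```

In this 1D form the two results agree up to rounding, because rescaling the serial axis multiplies every weight by the same factor and the weights are normalised afterwards. In a 2D lookup, where serial and date distances are combined, the transform does change the relative weighting, so it can matter there.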

The trick, I think, is to pick the local neighborhood correctly. For this approach it makes sense to look only at the samples within, for example, +/-6 months of the sample closest to the serial number one wants to look up (provided there are that many samples). Or one can simply pick a fixed number of samples (but in regions where the data is very sparse that will include 'bad' points that degrade the accuracy).
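The +/-6 month rule could look something like this. It is a sketch of one reading of the description, not the actual selection code: `local_neighbourhood` is a made-up name, and each sample is assumed to be a (serial, month count) pair with the month count being year * 12 + month - 1.

```python
def local_neighbourhood(serial, samples, window_months=6):
    """Samples dated within +/- window_months of the sample nearest to `serial`.

    `samples` is a non-empty list of (serial, month_count) pairs.
    """
    nearest = min(samples, key=lambda s: abs(s[0] - serial))
    return [s for s in samples if abs(s[1] - nearest[1]) <= window_months]

# Extracts quoted in this thread, as (serial, year * 12 + month - 1).
samples = [
    (26077000, 1968 * 12 + 5), (26079000, 1968 * 12 + 6),
    (29112000, 1970 * 12 + 2), (29636000, 1970 * 12 + 5),
    (32830000, 1973 * 12 + 11),
]
print(local_neighbourhood(26551800, samples))
```

Filtering by date rather than by serial distance means a sparse region simply returns few points, which is one possible signal for falling back to linear interpolation.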

So I currently have several approaches. The inverse distance weighted algorithm is tweak-able. It's also 1D or 2D. The neighborhood selection is also tweak-able (I have multiple approaches here). It gets daunting with so many options 😀

Concurrently running is a simple linear interpolation, the outlined z-transform / inverse distance weighting method and a hybridization of the two (based on sparseness of points (very trivial to implement)). I have totally stopped looking at polynomials.

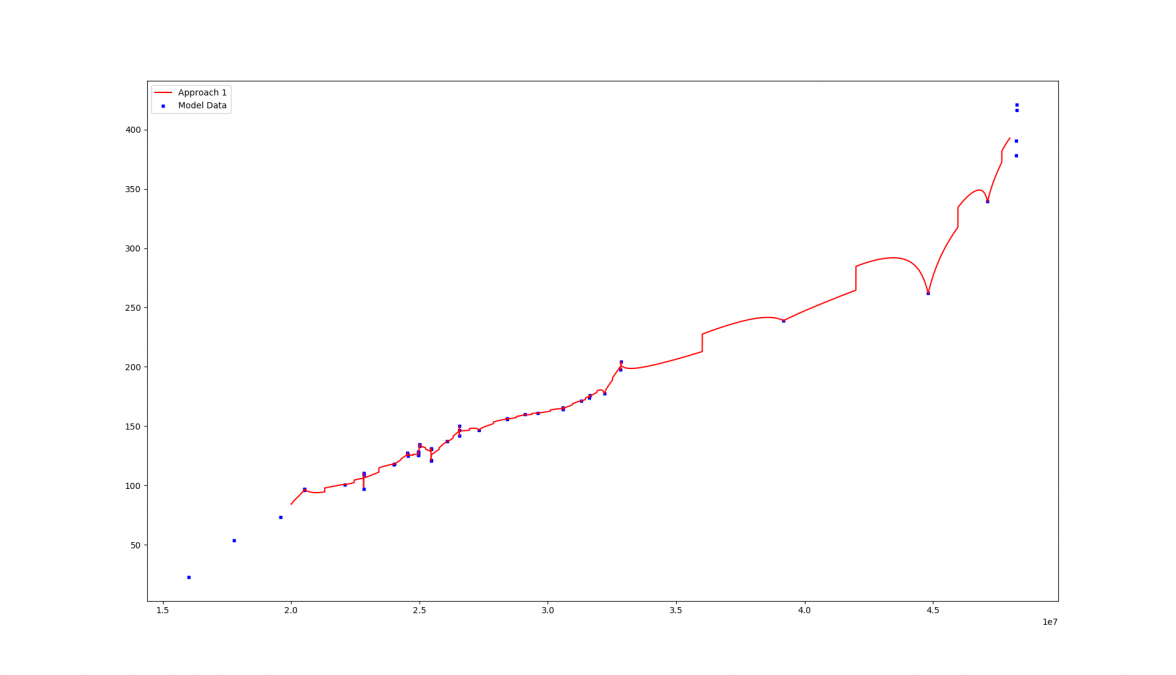

Function for Lin. Inter.

Zoomed into a 'funky' region

Function for Z-transform with IDW

I don't zoom into the interesting bit, here, because its the same for the hybrid approach.

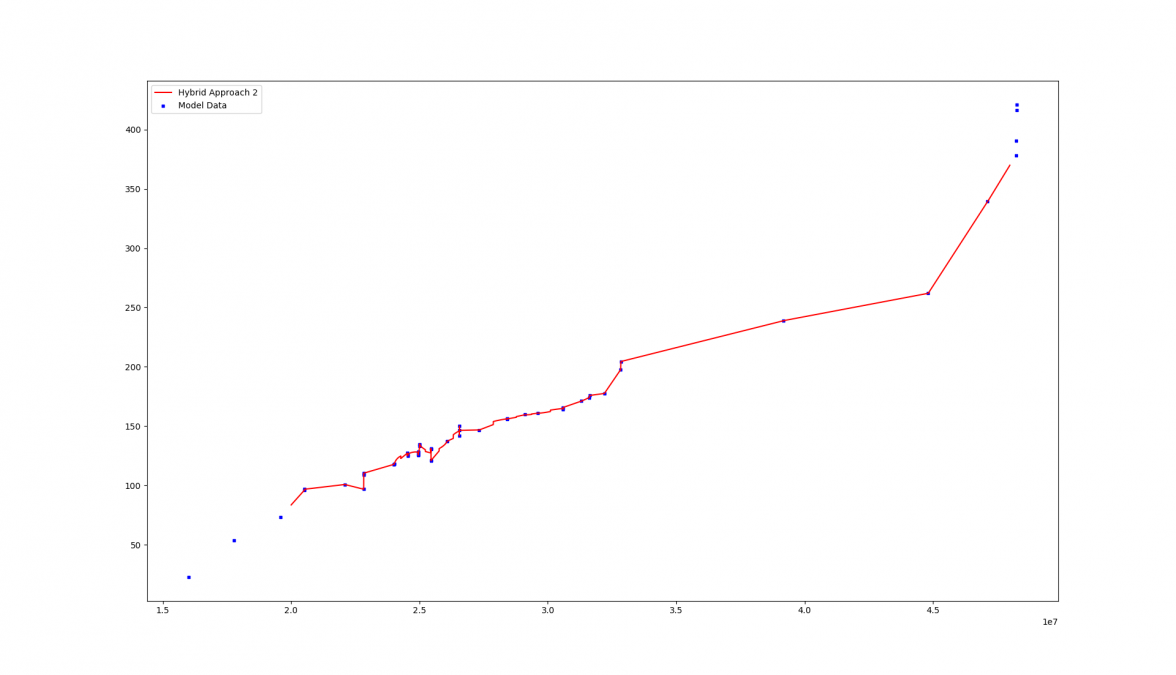

Function for Hybrid Approach (from far looks like Linear Interpolation)

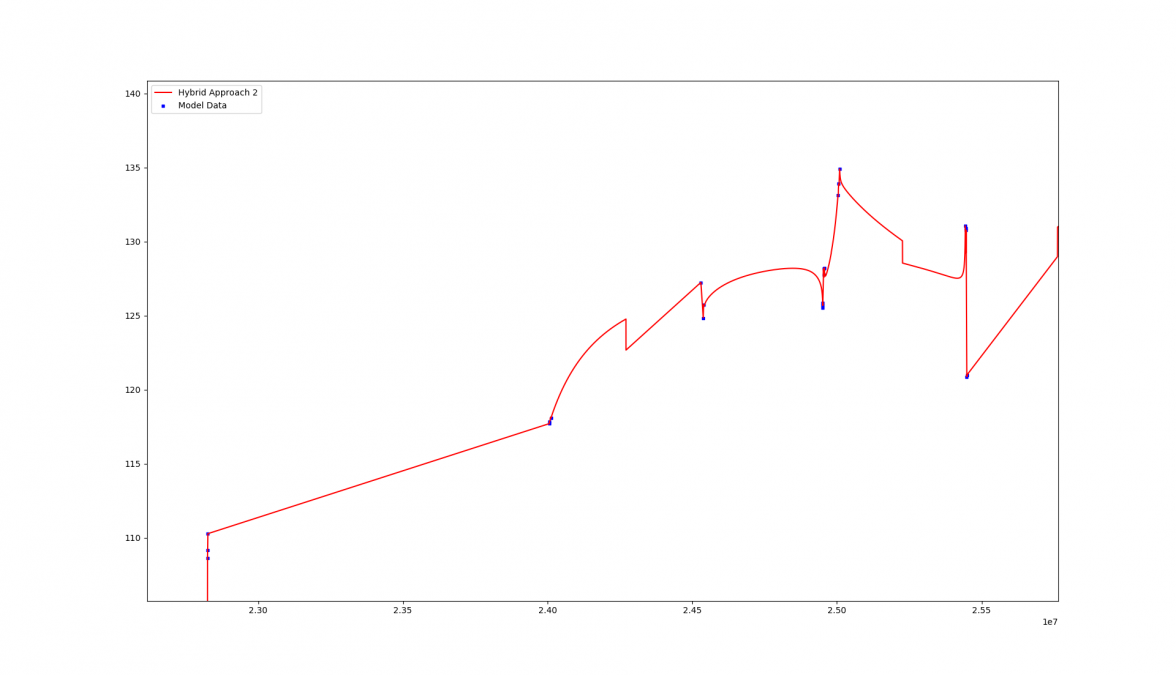

But when looking at the same interesting region :

I received some data from another kindly OF member via PM -> the '77 Speedy...👍

I see @Banner Roar added some data, and I forgot to take a look at it, so used that.

I now have 4 data points that are not in the model (ideally these would get incorporated).

Here are movement numbers : archive extract dates i.e. the expected answers.

265518XX : 1968 / 10

25443XXX : 1967 / 11

39925152 : 1977 / 9

3283XXXX : 1973 / 12

Here are the results (and errors).

3rd Order Polynomial:

26551800 = 1968 / 9 (-1 month)

25443000 = 1967 / 12 (+1 month)

39925152 = 1977 / 8 (-1 month)

32830000 = 1972 / 7 (-17 months) -> totally wrong year...

Linear Interpolation :

26551800 = 1968 / 10.0 (spot on)

25443000 = 1967 / 11.0 (spot on)

32830000 = 1973 / 5.0 (-7 months)

39925152 = 1977 / 2.0 (-7 months)

Statistical Approach 1:

26551800 = 1968 / 10 (0 months)

25443000 = 1967 / 10 (-1 months)

39925152 = 1977 / 6 (-3 months)

32830000 = 1973 / 7 (-5 months)

Hybrid Approach 2 :

26551800 = 1968 / 10.0 (0 months)

25443000 = 1967 / 10.0 (-1 months)

32830000 = 1973 / 5.0 (-7 months)

39925152 = 1977 / 2.0 (-7 months)

It's clear to see the polynomial performs worst. Linear interpolation is not bad. Statistical Approach 1 is looking best. And of the other three, Hybrid Approach 2 (with current parameters) is looking worst.

Keep in mind the model is weak (15 years from '58 to '73 ---> 49 good data points, 19 years from '73 to '92 ---> 8 good data points) and there are only 4 test points. As more data is added to the model (including random-bin-chaos) the last 2 algorithms should get more accurate. It's expected that the hybrid approach should perform better (as it covers most scenarios better and should 'get the best of both' so to speak), not sure why it doesn't yet... something I am missing...

I would really appreciate more data! Please! The difference in your dataset (@WurstEver, I am talking to you 😎) and mine would be great test points for injection (and vice versa, I am certain we have some different points, well, you more than me)...

If any other folk made it this far, I apologize for the looooong message. I tried to keep it short 😀 And naturally, all data, ideas, comments are welcome!

Best regards,

Eugene

eugeneandresson

·To the kind soul that gifted the test data, thank you very much! Here is a quick run of a whole bunch more test values (not incorporated into the model)... everything later than '85 is ignored (most people here don't care about that in any case)...

format :

>>> serial prediction (actual) Error : in months (accumulated error at the end of each run)

**** Linear ****

>>> 26551800 = 1968 / 10.0 ( 1968/10/30 ) Error : 0.06620261728 months

>>> 25443000 = 1967 / 11.0 ( 1967/11/8 ) Error : -0.763252391541 months

>>> 32193764 = 1971 / 9.0 ( 1971/10/8 ) Error : -0.114064046471 months

>>> 32830000 = 1973 / 5.0 ( 1973/12/1 ) Error : 5.7660295521 months

>>> 39925152 = 1977 / 2.0 ( 1977/9/1 ) Error : 6.21523581682 months

>>> 31622000 = 1971 / 7.0 ( 1971/8/16 ) Error : 0.680136240947 months

>>> 30598000 = 1970 / 10.0 ( 1970/12/16 ) Error : 1.83898878765 months

>>> 22824000 = 1965 / 1.0 ( 1965/12/20 ) Error : 10.9126634154 months

>>> 24530000 = 1967 / 7.0 ( 1966/12/27 ) Error : -7.3314988148 months

>>> 26079000 = 1968 / 5.0 ( 1968/7/2 ) Error : 0.841275708722 months

>>> 19832000 = 1963 / 7.0 ( 1963/6/5 ) Error : -2.3274449314 months

>>> 26077000 = 1968 / 5.0 ( 1968/6/7 ) Error : 0.107990143636 months

>>> 29636000 = 1970 / 5.0 ( 1970/6/8 ) Error : 0.223321579461 months

>>> 29112000 = 1970 / 4.0 ( 1970/3/12 ) Error : -1.29475342794 months

Accumulated Error : 38.4828574742 months

**** Approach 1 ****

>>> 26551800 = 1968 / 10.0 ( 1968/10/30 ) Error : [-0.08553981] months

>>> 25443000 = 1967 / 10.0 ( 1967/11/8 ) Error : [ 0.09247382] months

>>> 32193764 = 1971 / 10.0 ( 1971/10/8 ) Error : [-0.5194193] months

>>> 32830000 = 1973 / 7.0 ( 1973/12/1 ) Error : [ 3.64766273] months

>>> 39925152 = 1977 / 6.0 ( 1977/9/1 ) Error : [ 1.7125164] months

>>> 31622000 = 1971 / 7.0 ( 1971/8/16 ) Error : [ 0.70175474] months

>>> 30598000 = 1970 / 10.0 ( 1970/12/16 ) Error : [ 1.94408995] months

>>> 22824000 = 1965 / 7.0 ( 1965/12/20 ) Error : [ 4.56719176] months

>>> 24530000 = 1967 / 7.0 ( 1966/12/27 ) Error : [-7.33149881] months

>>> 26079000 = 1968 / 5.0 ( 1968/7/2 ) Error : [ 0.92155251] months

>>> 19832000 = 1963 / 10.0 ( 1963/6/5 ) Error : [-5.10445993] months

>>> 26077000 = 1968 / 5.0 ( 1968/6/7 ) Error : [ 0.10585481] months

>>> 29636000 = 1970 / 5.0 ( 1970/6/8 ) Error : [ 0.2761651] months

>>> 29112000 = 1970 / 4.0 ( 1970/3/12 ) Error : [-1.28906345] months

Accumulated Error : [ 28.29924313]

**** Hybrid Approach 2 ****

>>> 26551800 = 1968 / 10.0 ( 1968/10/30 ) Error : [-0.08638238] months

>>> 25443000 = 1967 / 10.0 ( 1967/11/8 ) Error : [ 0.71371186] months

>>> 32193764 = 1971 / 9.0 ( 1971/10/8 ) Error : [-0.07861259] months

>>> 32830000 = 1973 / 5.0 ( 1973/12/1 ) Error : 5.7660295521 months

>>> 39925152 = 1977 / 2.0 ( 1977/9/1 ) Error : 6.21523581682 months

>>> 31622000 = 1971 / 7.0 ( 1971/8/16 ) Error : [ 0.6689169] months

>>> 30598000 = 1970 / 10.0 ( 1970/12/16 ) Error : [ 1.95077049] months

>>> 22824000 = 1965 / 1.0 ( 1965/12/20 ) Error : [ 10.91277806] months

>>> 24530000 = 1967 / 7.0 ( 1966/12/27 ) Error : [-7.33149881] months

>>> 26079000 = 1968 / 5.0 ( 1968/7/2 ) Error : [ 1.01430403] months

>>> 19832000 = 1963 / 7.0 ( 1963/6/5 ) Error : -2.3274449314 months

>>> 26077000 = 1968 / 5.0 ( 1968/6/7 ) Error : [ 0.14948734] months

>>> 29636000 = 1970 / 5.0 ( 1970/6/8 ) Error : [ 0.37149638] months

>>> 29112000 = 1970 / 4.0 ( 1970/3/12 ) Error : [-1.28906345] months

Accumulated Error : [ 38.87573259]

Note: a lot of the serials have their last 3 digits missing.

It seems that all 3 approaches got the year wrong for 1 value (SN 24530000)... can't yet say why. Need to analyze (but not now).

Seems the statistical approach is winning.
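The accumulated figures are consistent with a plain sum of absolute errors, which can be checked from the numbers listed in the three runs. A small sketch (the per-serial errors are copied from those runs; the mean per test point is an extra summary added here, not something from the original output):

```python
# Signed errors in months, copied from the three runs listed in this post.
ERRORS = {
    "Linear": [
        0.06620261728, -0.763252391541, -0.114064046471, 5.7660295521,
        6.21523581682, 0.680136240947, 1.83898878765, 10.9126634154,
        -7.3314988148, 0.841275708722, -2.3274449314, 0.107990143636,
        0.223321579461, -1.29475342794,
    ],
    "Approach 1": [
        -0.08553981, 0.09247382, -0.5194193, 3.64766273, 1.7125164,
        0.70175474, 1.94408995, 4.56719176, -7.33149881, 0.92155251,
        -5.10445993, 0.10585481, 0.2761651, -1.28906345,
    ],
    "Hybrid Approach 2": [
        -0.08638238, 0.71371186, -0.07861259, 5.7660295521, 6.21523581682,
        0.6689169, 1.95077049, 10.91277806, -7.33149881, 1.01430403,
        -2.3274449314, 0.14948734, 0.37149638, -1.28906345,
    ],
}

def accumulated(errors):
    """Sum of absolute errors, so over- and under-estimates do not cancel."""
    return sum(abs(e) for e in errors)

# Print the methods from smallest to largest accumulated error.
for name, errors in sorted(ERRORS.items(), key=lambda kv: accumulated(kv[1])):
    total = accumulated(errors)
    print(f"{name}: total {total:.2f}, mean {total / len(errors):.2f} months")
```

Two serials (22824000 and 24530000) account for a large share of every total, so with only 14 test points the ranking could easily shift as more data comes in.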

I plan on making this available soon (need to clean it up a bit) for members of the forum (perhaps @kov can host an interactive version 😀 ) ...

Happy weekend.

kov

·Great job 😉

I am open to host it, indeed 👍

WurstEver

·If members find this interesting and would like to share the details of their extracts I'd be happy to incorporate new observations into the data set and see if this goes anywhere.

So, I guess it looks like this might be going somewhere! 😀

To facilitate development I have set up a Dropbox containing my data set (n=105). To keep this as a community effort, I'm happy to share these data with OF members who plan on contributing to the analyses on the understanding that any statistical techniques or insights derived from them are shared back with the community in this thread and in the hope that you'll upload more data as you find it so that this file can serve as a common repository of information. This will help to ensure that everyone has access to as big a data set as possible, that we're all working from the same baseline, and that we're not all spearing off in different directions, reinventing the wheel, and rediscovering old problems.

The data in the file are de-identified, so that the anonymity of the folks who volunteered their information is preserved. For the same reason, I have not uploaded the extract images that I have found or been PMed for many of these watches. If folks uploading data would kindly hold on to any images that they have, and make a note in the data file indicating that they have the images (there are columns set aside for that information), then we can always track the images down on a case-by-case basis if there is some pressing need to do so. If anyone who has sent me information is not comfortable with me making their data available to other OF members, even in an anonymised form, please just let me know.

I am hoping that Dropbox will do a reasonable job of version control. We'll see... Please PM me if you are interested in doing some analyses and would like access to the data. Feedback on the structure and content of the data file is of course welcome - perhaps that would best be discussed here in the thread.

It is amazing to me how much progress has been made in so little time. This has been great fun and it is a fantastic demonstration of the power of collaboration. Thanks everyone for your contributions!! 👍

JMH76

·Ignore this

eugeneandresson

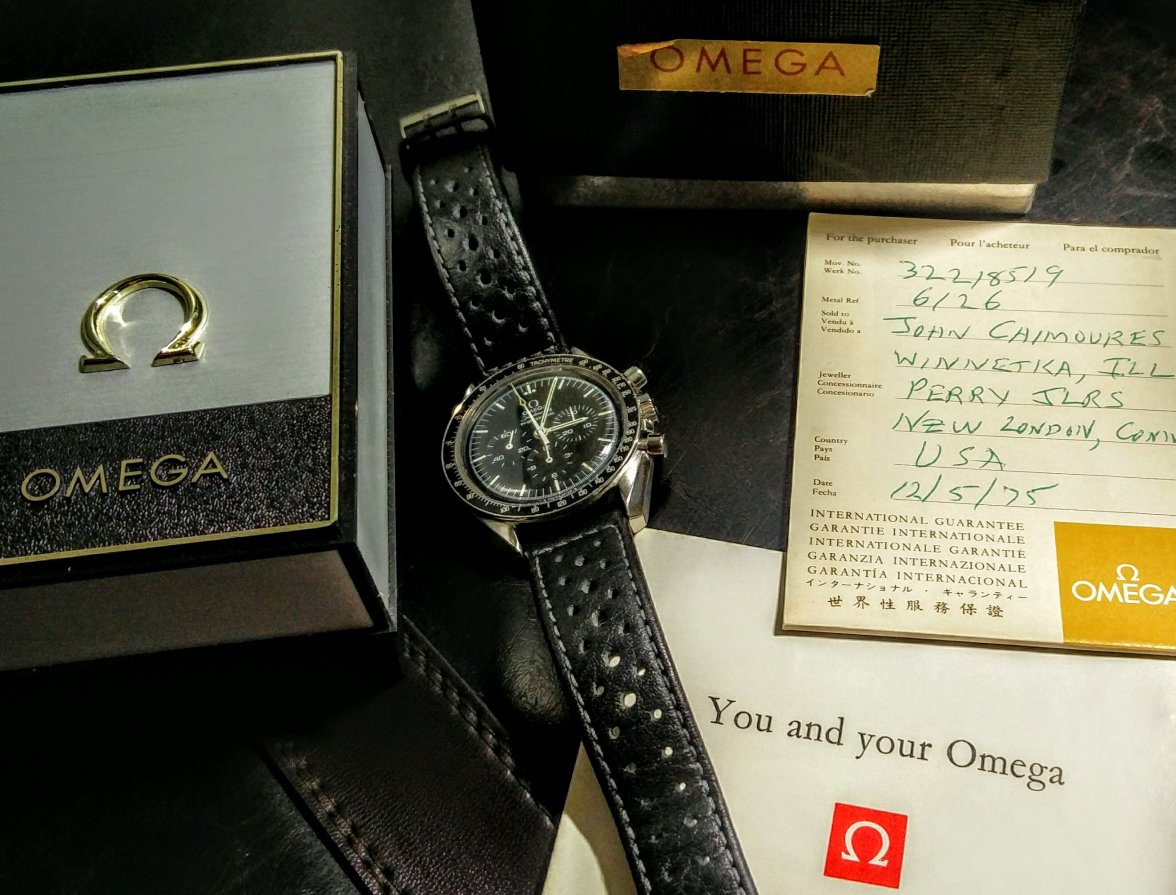

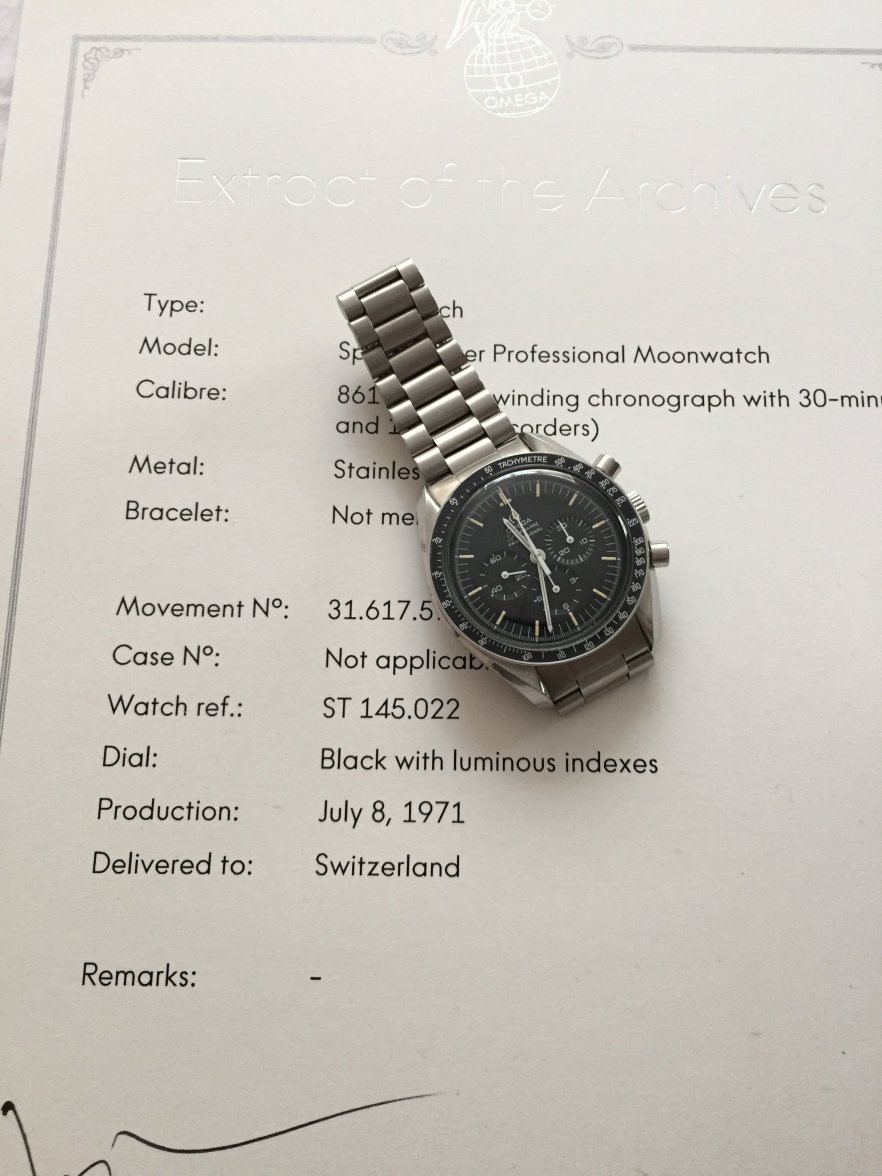

·Nice watch 👍

This is what the crystal ball tells me when I feed it your number :

>>> 32218519 = 1971 / 10.0 ( Linear )

>>> 32218519 = 1971 / 11.0 ( Stats )

>>> 32218519 = 1971 / 9.0 ( Hybrid )

We don't know which is correct 😀 Let's say the average of those 3 would be 1971 / 10 ...
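For what it's worth, averaging year/month estimates is easiest on a single month counter rather than on the month numbers directly (so that estimates straddling a year boundary still average sensibly). A minimal sketch, assuming each method returns a (year, month) pair; the helper names `to_index` and `from_index` are made up for illustration:

```python
def to_index(year, month):
    """Map (year, month) to a running month count so dates can be averaged."""
    return year * 12 + (month - 1)


def from_index(idx):
    """Inverse of to_index: running month count back to (year, month)."""
    year, month0 = divmod(idx, 12)
    return year, month0 + 1


# The three estimates quoted above for serial 32218519.
estimates = {"Linear": (1971, 10), "Stats": (1971, 11), "Hybrid": (1971, 9)}

indices = [to_index(y, m) for y, m in estimates.values()]
consensus = from_index(round(sum(indices) / len(indices)))
spread_months = max(indices) - min(indices)

print("consensus:", consensus, "spread (months):", spread_months)
```

The spread between the methods is a cheap hint at how much to trust the averaged date.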

WurstEver

·Here are the results (and errors).

3rd Order Polynomial:

26551800 = 1968 / 9 (-1 month)

25443000 = 1967 / 12 (+1 month)

39925152 = 1977 / 8 (-1 month)

32830000 = 1972 / 7 (-17 months) -> totally wrong year...

Linear Interpolation :

26551800 = 1968 / 10.0 (spot on)

25443000 = 1967 / 11.0 (spot on)

32830000 = 1973 / 5.0 (-7 months)

39925152 = 1977 / 2.0 (-7 months)

Statistical Approach 1:

26551800 = 1968 / 10 (0 months)

25443000 = 1967 / 10 (-1 months)

39925152 = 1977 / 6 (-3 months)

32830000 = 1973 / 7 (-5 months)

Hybrid Approach 2 :

26551800 = 1968 / 10.0 (0 months)

25443000 = 1967 / 10.0 (-1 months)

32830000 = 1973 / 5.0 (-7 months)

39925152 = 1977 / 2.0 (-7 months)

So the most accurate results for each of these four watches are:

265518XX : exact match; linear, statistical, hybrid

25443XXX : exact match; linear

39925152 : -1 month; polynomial

3283XXXX : -5 months; statistical

Very cool stuff! This is a lovely example of why the best approach might end up incorporating a choice or trade-off between two or more estimation methods based on what is known; for example, serial number ranges or proximity of the 'search' serial to known data points.

It's interesting to note that the best prediction for one of these four watches was the polynomial method, which does not appear to perform as well as the more 'local' estimation methods on average. Just for interest, I ran a recent polynomial model against the longer list of 14 watches in @eugeneandresson's post above and found that it yielded smaller errors than the other three methods for two of the watches in that list. It occurs to me that these results might be telling us something about how we should be thinking about the performance of our methods. Specifically, if we are aiming for an optimal mix of methods, it's probably just as important to discover the conditions under which each method performs well as it is to evaluate the accuracy of each method on average.
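As a rough illustration of looking at performance per watch rather than on average, here is a minimal sketch that picks the method(s) with the smallest absolute error for each of the four test serials. The error values are the whole-month errors quoted in the results above; the dictionary layout and the function name `best_methods` are just assumptions for the example:

```python
# Whole-month errors per method and serial, as quoted in the results above.
errors_months = {
    "polynomial":  {"26551800": -1, "25443000": 1, "39925152": -1, "32830000": -17},
    "linear":      {"26551800": 0, "25443000": 0, "39925152": -7, "32830000": -7},
    "statistical": {"26551800": 0, "25443000": -1, "39925152": -3, "32830000": -5},
    "hybrid":      {"26551800": 0, "25443000": -1, "39925152": -7, "32830000": -7},
}


def best_methods(errors):
    """For each serial, list every method that ties for the smallest |error|."""
    serials = next(iter(errors.values())).keys()
    winners = {}
    for sn in serials:
        smallest = min(abs(errors[m][sn]) for m in errors)
        winners[sn] = sorted(m for m in errors if abs(errors[m][sn]) == smallest)
    return winners


winners = best_methods(errors_months)
for sn, methods in winners.items():
    print(sn, "->", ", ".join(methods))
```

With more test watches, the same tabulation could be grouped by serial range or by distance to the nearest known data point to see where each method tends to win.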

WurstEver

·Hello. Very cool what you all have done. I'm going to order an extract for my Straight Writing.

Based on serial number in pic can you tell me the date predicted by the model?

The 32s are a tricky spot, so this will be interesting. For what it's worth, in the large data set we have a 32199xxx that is known to be from October 1971 and a 32217xxx that is known to be from September 1972.

eugeneandresson

·Apologies for the delayed update. Been having stonking good summer weather here, so been enjoying a bit of R&R...cheers!

It was supposed to get cold and turn miserable, but alas working at my desk is still very slippery, thus it hasn't yet....the swimmable water calls. Regardless, I poked around tonight shortly...

@WurstEver yeah, there are some tricky spots. Don't see an easy way around these (but then haven't given it any thought yet). Easy to have hindsight when the answer is there (i.e. as in the test values with known dates), but when one doesn't know the answer (i.e. when we have thrown every known sample into a model, and new queries are unknown), how to choose the correct method? Regarding the test you ran using polynomials : did you remove the duplicate data points from your model before you tested these? I ask as

- I am sure you have a lot of these points in your model (i.e. I am sure the same kind soul who sent them to me also sent them to you)

- If they are in the model, the exact dates should be returned by it. I have explicitly not incorporated these datapoints in the model yet...

That brings me to the bothersome SN : 24530000 I mentioned in my last post. I figured out the problem. It's a tricky spot to do with incomplete data on two fronts.

Firstly, in my model I have the following two samples :

A) 24538069, 1967/5/26 (this corresponds to an image I found where everything is visible)

B) 2453XXXX, 1967/8/7 (this corresponds to an image I found where the last 4 digits are not visible)

And then in the test data I have been given (thanks once again to the donor 👍)

C) 24530XXX, 1966/12/27 (this corresponds to an image I was PM'd, where the last 3 digits are not visible).

Clearly B and C, when replacing 'X's with '0's, become the same SN, but at two different points in time. This becomes a non-linear problem with two correct answers. And we can't have that as most of us don't have time machines yet (I still believe some of the honourable Speedmaster hoarders on this site do 😗).

The answer given was : >>> 24530000 = 1967 / 5.0, which is acceptable as one of the answers. But clearly B should be removed, as the 4th unknown significant digit can make all the difference...and I suspect that is where the problem lies...

Complete data is king!
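One way to make the collision visible is to treat a masked serial as a range instead of zero-filling it. This is only a sketch of that idea, not what the model currently does; it assumes the 'X's are always trailing digits, and the names `serial_range` and `overlaps` are invented for the example:

```python
def serial_range(masked):
    """Return the (lowest, highest) serial a masked number could stand for.

    Assumes unknown digits are written as trailing 'X' characters.
    """
    text = masked.upper()
    return int(text.replace("X", "0")), int(text.replace("X", "9"))


def overlaps(a, b):
    """True if two inclusive (lo, hi) ranges share at least one serial."""
    return a[0] <= b[1] and b[0] <= a[1]


# Samples A, B and C from the post above.
samples = {"A": "24538069", "B": "2453XXXX", "C": "24530XXX"}
ranges = {name: serial_range(sn) for name, sn in samples.items()}

for name, (lo, hi) in ranges.items():
    print(name, lo, hi, "width:", hi - lo + 1)

# Zero-filling collapses B and C onto the same serial number...
print("B and C zero-fill to the same SN:", ranges["B"][0] == ranges["C"][0])
# ...while the ranges show B could sit anywhere in a 10 000-wide window.
print("B overlaps C:", overlaps(ranges["B"], ranges["C"]))
```

A sample whose range is wide compared with the local spacing of known points (like B here) could then be dropped or down-weighted before fitting.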

Anyhoo. Then I also had a few more ideas which I quickly implemented.

1) How to improve the Hybrid method some (i.e. bring it closer to the stats method) -> my previous results were not expected.

2) Use Xth order polyfit in the local neighbourhood as opposed to over the entire model (3rd order gave best results, I tried a bunch).

Then I thought, seeing as there are a bunch of methods all running concurrently, let's add the original Xth order polyfit over the entire model just for comparison (again, 3rd order seems to give best results, I tried a bunch).

Here are my findings :

**** Linear ****

>>> 26551800 = 1968 / 10.0 ( 1968/10/30 ) Error : 0.06620261728 months

>>> 25443000 = 1967 / 11.0 ( 1967/11/8 ) Error : -0.763252391541 months

>>> 32193764 = 1971 / 9.0 ( 1971/10/8 ) Error : -0.114064046471 months

>>> 32830000 = 1973 / 5.0 ( 1973/12/1 ) Error : 5.7660295521 months

>>> 39925152 = 1977 / 2.0 ( 1977/9/1 ) Error : 6.21523581682 months

>>> 31622000 = 1971 / 7.0 ( 1971/8/16 ) Error : 0.680136240947 months

>>> 30598000 = 1970 / 10.0 ( 1970/12/16 ) Error : 1.83898878765 months

>>> 22824000 = 1965 / 1.0 ( 1965/12/20 ) Error : 10.9126634154 months

>>> 24530000 = 1967 / 5.0 ( 1966/12/27 ) Error : -4.82805783247 months

>>> 26079000 = 1968 / 5.0 ( 1968/7/2 ) Error : 0.841275708722 months

>>> 19832000 = 1963 / 7.0 ( 1963/6/5 ) Error : -2.3274449314 months

>>> 26077000 = 1968 / 5.0 ( 1968/6/7 ) Error : 0.107990143636 months

>>> 29636000 = 1970 / 5.0 ( 1970/6/8 ) Error : 0.223321579461 months

>>> 29112000 = 1970 / 4.0 ( 1970/3/12 ) Error : -1.29475342794 months

Accumulated Error : 35.9794164919 months ( Av. 2.56995832085 month per lookup)

**** Approach 1 (Stats) ****

>>> 26551800 = 1968 / 10.0 ( 1968/10/30 ) Error : [-0.08553981] months

>>> 25443000 = 1967 / 10.0 ( 1967/11/8 ) Error : [ 0.09247382] months

>>> 32193764 = 1971 / 10.0 ( 1971/10/8 ) Error : [-0.5194193] months

>>> 32830000 = 1973 / 7.0 ( 1973/12/1 ) Error : [ 3.64766273] months

>>> 39925152 = 1977 / 6.0 ( 1977/9/1 ) Error : [ 1.7125164] months

>>> 31622000 = 1971 / 7.0 ( 1971/8/16 ) Error : [ 0.70175474] months

>>> 30598000 = 1970 / 10.0 ( 1970/12/16 ) Error : [ 1.94408995] months

>>> 22824000 = 1965 / 7.0 ( 1965/12/20 ) Error : [ 4.56719176] months

>>> 24530000 = 1967 / 5.0 ( 1966/12/27 ) Error : [-5.17238503] months

>>> 26079000 = 1968 / 5.0 ( 1968/7/2 ) Error : [ 0.92155251] months

>>> 19832000 = 1963 / 10.0 ( 1963/6/5 ) Error : [-5.10445993] months

>>> 26077000 = 1968 / 5.0 ( 1968/6/7 ) Error : [ 0.10585481] months

>>> 29636000 = 1970 / 5.0 ( 1970/6/8 ) Error : [ 0.2761651] months

>>> 29112000 = 1970 / 4.0 ( 1970/3/12 ) Error : [-1.28906345] months

Accumulated Error : [ 26.14012935] months ( Av. [ 1.8671521] month per lookup)

**** Hybrid Approach 2 ****

>>> 26551800 = 1968 / 10.0 ( 1968/10/30 ) Error : [-0.08553939] months

>>> 25443000 = 1967 / 10.0 ( 1967/11/8 ) Error : [ 0.09247282] months

>>> 32193764 = 1971 / 10.0 ( 1971/10/8 ) Error : [-0.5194193] months

>>> 32830000 = 1973 / 5.0 ( 1973/12/1 ) Error : 5.7660295521 months

>>> 39925152 = 1977 / 2.0 ( 1977/9/1 ) Error : 6.21523581682 months

>>> 31622000 = 1971 / 7.0 ( 1971/8/16 ) Error : [ 0.70175474] months

>>> 30598000 = 1970 / 10.0 ( 1970/12/16 ) Error : [ 1.94408995] months

>>> 22824000 = 1965 / 7.0 ( 1965/12/20 ) Error : [ 4.56739464] months

>>> 24530000 = 1967 / 5.0 ( 1966/12/27 ) Error : [-5.17238503] months

>>> 26079000 = 1968 / 5.0 ( 1968/7/2 ) Error : [ 0.92155251] months

>>> 19832000 = 1963 / 7.0 ( 1963/6/5 ) Error : -2.3274449314 months

>>> 26077000 = 1968 / 5.0 ( 1968/6/7 ) Error : [ 0.10585481] months

>>> 29636000 = 1970 / 5.0 ( 1970/6/8 ) Error : [ 0.2761651] months

>>> 29112000 = 1970 / 4.0 ( 1970/3/12 ) Error : [-1.28906345] months

Accumulated Error : [ 29.98440204] months ( Av. [ 2.141743] month per lookup)

**** PolyfitWindow ****

>>> 26551800 = 1969 / 2.0 ( 1968/10/30 ) Error : -4.0782567508 months

>>> 25443000 = 1968 / 3.0 ( 1967/11/8 ) Error : -4.22725554581 months

>>> 32193764 = 1972 / 0.0 ( 1971/10/8 ) Error : -3.17961077267 months

>>> 32830000 = 1973 / 6.0 ( 1973/12/1 ) Error : 4.69083193818 months

>>> 39925152 = 1976 / 10.0 ( 1977/9/1 ) Error : 10.4778567528 months

>>> 31622000 = 1971 / 6.0 ( 1971/8/16 ) Error : 1.78600928536 months

>>> 30598000 = 1970 / 9.0 ( 1970/12/16 ) Error : 2.62364222994 months

>>> 22824000 = 1965 / 7.0 ( 1965/12/20 ) Error : 4.22379842438 months

>>> 24530000 = 1967 / 5.0 ( 1966/12/27 ) Error : -5.40543572407 months

>>> 26079000 = 1968 / 4.0 ( 1968/7/2 ) Error : 2.07641244964 months

>>> 19832000 = 1963 / 8.0 ( 1963/6/5 ) Error : -2.68052909146 months

>>> 26077000 = 1968 / 5.0 ( 1968/6/7 ) Error : 0.379679403857 months

>>> 29636000 = 1970 / 5.0 ( 1970/6/8 ) Error : 0.288988346695 months

>>> 29112000 = 1970 / 4.0 ( 1970/3/12 ) Error : -1.30260171252 months

Accumulated Error : 47.4209084282 months ( Av. 3.38720774487 month per lookup)

**** Polyfit ****

>>> 26551800 = 1968 / 9.0 ( 1968/10/30 ) Error : 0.782834304973 months

>>> 25443000 = 1968 / 1.0 ( 1967/11/8 ) Error : -2.66447946796 months

>>> 32193764 = 1971 / 10.0 ( 1971/10/8 ) Error : -0.638409514793 months

>>> 32830000 = 1972 / 2.0 ( 1973/12/1 ) Error : 21.0952853108 months

>>> 39925152 = 1976 / 11.0 ( 1977/9/1 ) Error : 8.86793282974 months

>>> 31622000 = 1971 / 6.0 ( 1971/8/16 ) Error : 1.23112233592 months

>>> 30598000 = 1970 / 12.0 ( 1970/12/16 ) Error : -0.343219010597 months

>>> 22824000 = 1966 / 2.0 ( 1965/12/20 ) Error : -2.7133030685 months

>>> 24530000 = 1967 / 6.0 ( 1966/12/27 ) Error : -5.71692287597 months

>>> 26079000 = 1968 / 6.0 ( 1968/7/2 ) Error : 0.297263851107 months

>>> 19832000 = 1963 / 6.0 ( 1963/6/5 ) Error : -0.375203935805 months

>>> 26077000 = 1968 / 6.0 ( 1968/6/7 ) Error : -0.509791556429 months

>>> 29636000 = 1970 / 6.0 ( 1970/6/8 ) Error : -0.575092675453 months

>>> 29112000 = 1970 / 3.0 ( 1970/3/12 ) Error : -0.131612033496 months

Accumulated Error : 45.9424727715 months ( Av. 3.28160519797 month per lookup)

As can be seen, the Statistical and Hybrid Approaches both improved, and converge...

...IS NICE!
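For anyone checking the summary lines: the 'Accumulated Error' figures appear to be the sum of the absolute per-lookup errors, and 'Av.' is that sum divided by the 14 lookups. A minimal sketch using the Linear errors exactly as listed above (variable names are illustrative only, not taken from the actual script):

```python
# Per-lookup errors in months, copied from the Linear listing above.
linear_errors = [
    0.06620261728, -0.763252391541, -0.114064046471, 5.7660295521,
    6.21523581682, 0.680136240947, 1.83898878765, 10.9126634154,
    -4.82805783247, 0.841275708722, -2.3274449314, 0.107990143636,
    0.223321579461, -1.29475342794,
]

# Sum of absolute errors, so over- and under-estimates do not cancel out.
accumulated = sum(abs(e) for e in linear_errors)
average = accumulated / len(linear_errors)

print("Accumulated Error :", accumulated, "months")
print("Av. :", average, "month per lookup")
```

Because the absolute values are summed, a single large miss (such as the 10.9 months for 22824000) dominates the total, so a median or the worst-case error may be worth reporting alongside it.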

Now time to try some data from the 80's (I presently have very few samples here)...no doubt some more careful ponderings will be required. More soon...

PS: @WurstEver thanks for making your data available. I haven't grabbed it yet ... I will look at using the differences between our datasets (yours should have 1.5x better resolution than mine) to further test after 80's analysis...

SpeedyPhill

·How does it all match up with:

https://omegaforums.net/threads/spe...umbers-from-the-moonwatch-only-website.58897/

😕
