Hi all! Thanks so much to everyone who has commented and contributed data. This is why OF is such a fantastic forum! Today I'm posting an updated data set that contains 76 serial number/production date pairs for Speedmaster Professionals in the 321-861-1861 family (up from 40 in the original data set). For most of these watches I have a photo of the extract, so we can be fairly confident in the data. I have also developed an updated model based on the expanded data, and things are looking reasonably good 👍

Bottom line up front: while this curve-fitting approach is nowhere near perfect and is still very much a work in progress, it does seem to be a promising method.

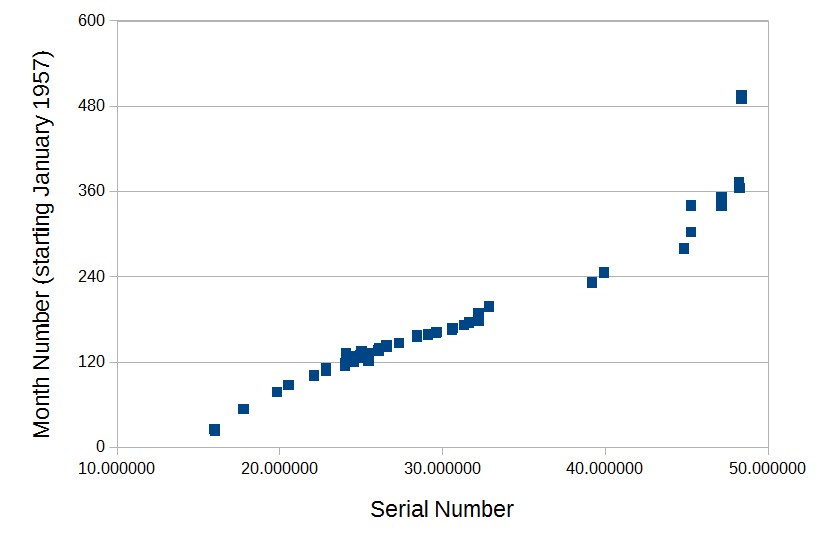

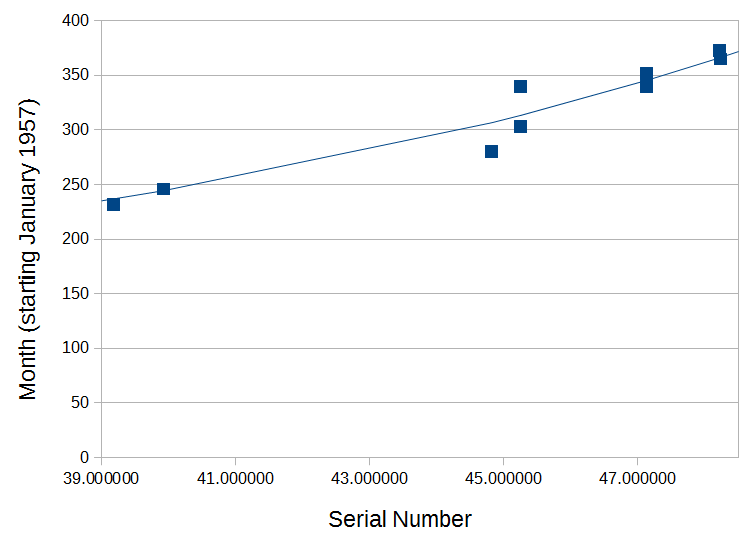

The entire data set (n = 76) is shown in the scatterplot below...

As with the original numbers, there is a roughly linear relationship between serial numbers and production dates throughout the 60s and 70s. The slope is different on the far left (low serial numbers, early years) and the far right (high serial numbers, late years), and there appears to be more spread (i.e., the relationship is less predictable) in the later years. The two extreme cases from the original data set (watches with production dates in the late 1990s) can still be seen in the top right corner.

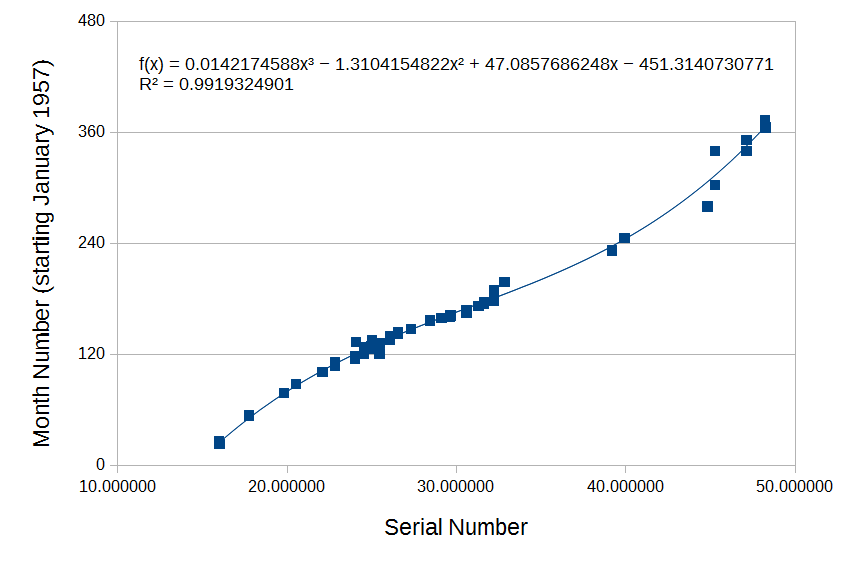

With the exception of those two watches from the mid-to-late 1990s, it appeared that the entire data set might be reasonably well fit by a slightly more complex curve than the one I used in the original post, as was suggested by @eugeneandresson. So I removed those two cases (effectively reducing the sample size to n = 74 and restricting subsequent model development to watches made before 1990) and then fit a curve to the remaining data. A third-order polynomial yielded the fit shown below...

This model could be considered an improvement on the original linear model in the sense that it fits the updated data set quite well (R^2 = 0.99) and it covers a wider range of production dates than the original model. It's possible, though, that by expanding the scope of the model we might be sacrificing some precision. So, of course, the key question is: how does it perform relative to Hartmann's table?
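For anyone who wants to reproduce the fitting step outside a spreadsheet, here is a minimal, dependency-free Python sketch of a least-squares cubic fit plus the R^2 statistic. It is not necessarily how the model above was fitted, and the function names are hypothetical. One assumption worth flagging: x is centred and scaled before fitting to keep the arithmetic numerically stable, so the internal coefficients won't look like the posted ones, although the least-squares cubic itself (and hence its predictions) is the same.

```python
from statistics import mean, pstdev


def fit_cubic(xs, zs):
    """Least-squares fit of z = b0 + b1*t + b2*t^2 + b3*t^3, where t = (x - centre) / scale.

    Centring and scaling x (an assumption for numerical stability) keeps the
    normal equations well conditioned. Returns predict(x) taking raw x
    (serial number / 1,000,000).
    """
    centre, scale = mean(xs), pstdev(xs)
    ts = [(x - centre) / scale for x in xs]
    rows = [[t ** p for p in range(4)] for t in ts]  # design-matrix rows [1, t, t^2, t^3]

    # Augmented normal equations [A^T A | A^T z], a 4 x 5 matrix.
    aug = [
        [sum(r[i] * r[j] for r in rows) for j in range(4)]
        + [sum(r[i] * z for r, z in zip(rows, zs))]
        for i in range(4)
    ]

    # Forward elimination with partial pivoting.
    for col in range(4):
        pivot = max(range(col, 4), key=lambda i: abs(aug[i][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for i in range(col + 1, 4):
            factor = aug[i][col] / aug[col][col]
            for k in range(col, 5):
                aug[i][k] -= factor * aug[col][k]

    # Back-substitution for b0..b3.
    beta = [0.0] * 4
    for i in reversed(range(4)):
        tail = sum(aug[i][k] * beta[k] for k in range(i + 1, 4))
        beta[i] = (aug[i][4] - tail) / aug[i][i]

    def predict(x):
        t = (x - centre) / scale
        return sum(b * t ** p for p, b in enumerate(beta))

    return predict


def r_squared(predict, xs, zs):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    z_bar = mean(zs)
    ss_res = sum((z - predict(x)) ** 2 for x, z in zip(xs, zs))
    ss_tot = sum((z - z_bar) ** 2 for z in zs)
    return 1 - ss_res / ss_tot
```

A quick sanity check is to generate points from the posted cubic, refit them, and confirm that the fitted curve reproduces them with R^2 essentially equal to 1. With real (noisy) serial/date pairs, R^2 will of course come out below 1.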

As with the first model, I used the new model to calculate a predicted month number (counting from January 1957) for each watch, and then translated that into a predicted calendar year and month using slightly modified versions of the equations I originally posted (those were generally fine, but turned out to misbehave a bit around December). I then compared the model's ability to predict production year with estimates derived from Hartmann's table. Across the whole data set, the new model outperformed the table: it predicted just under 70% of production years correctly (0.68), while Hartmann's table predicted around half correctly (0.51).

Breaking things down by period, the model outperformed the table for watches produced in 1957-69 (accuracy = 0.74 versus 0.60) and in 1970-79 (accuracy = 0.65 versus 0.41). Both methods were pretty rubbish at predicting production years for watches made in 1980-89, but the model still outperformed Hartmann's table (accuracy = 0.29 versus 0.14; just two correct versus one, respectively). As the thread found by @JMH76 suggests, something seems to have changed in the mid-80s, and there's probably more work to do to get acceptable accuracy for that period.

The model and Hartmann's table agreed on production year about half the time (0.49). When the two methods agreed, that estimate was correct 75% of the time. When the two methods disagreed, the model was correct 60.5% of the time, Hartmann's table was correct 26.3% of the time, and both were wrong 13.2% of the time.
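The agreement breakdown above can be computed mechanically from three lists of years. Below is a minimal sketch; the function name and the toy data are hypothetical and are not the forum data set. One property it makes explicit: when the two methods disagree, at most one of them can be right, so the "model right", "table right" and "both wrong" shares always sum to 1 (which is why the 60.5/26.3/13.2 split adds up to 100%).

```python
def compare_methods(actual, model, table):
    """Year-level accuracy of two methods, plus how they fare when they (dis)agree.

    actual, model and table are equal-length lists of production years.
    """
    n = len(actual)
    model_ok = [m == a for m, a in zip(model, actual)]
    table_ok = [t == a for t, a in zip(table, actual)]
    both_wrong = [not (m or t) for m, t in zip(model_ok, table_ok)]
    agree = [i for i in range(n) if model[i] == table[i]]
    disagree = [i for i in range(n) if model[i] != table[i]]

    def share(hits, idx):
        # Fraction of the cases listed in idx where hits is True (None if idx is empty).
        return sum(hits[i] for i in idx) / len(idx) if idx else None

    return {
        "model_accuracy": sum(model_ok) / n,
        "table_accuracy": sum(table_ok) / n,
        "agreement_rate": len(agree) / n,
        # When the methods agree they give the same year, so model_ok == table_ok here.
        "correct_when_agree": share(model_ok, agree),
        "model_right_when_disagree": share(model_ok, disagree),
        "table_right_when_disagree": share(table_ok, disagree),
        "both_wrong_when_disagree": share(both_wrong, disagree),
    }


# Hypothetical toy example (six made-up watches, not real extracts):
stats = compare_methods(
    actual=[1965, 1968, 1971, 1973, 1975, 1978],
    model=[1965, 1968, 1971, 1974, 1975, 1977],
    table=[1965, 1967, 1971, 1973, 1976, 1979],
)
```

In this toy example the model gets 4 of 6 years right, the table 3 of 6, and the two agree on 2 watches (both correct).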

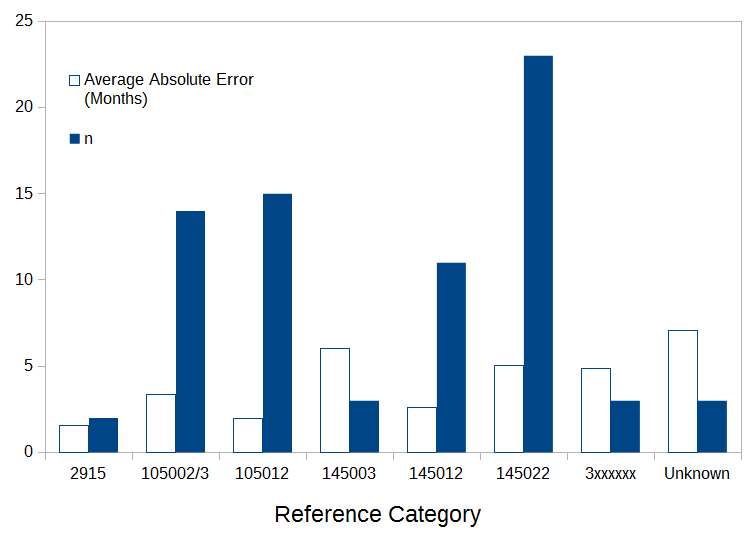

Regardless of the specific equation used, one benefit of the curve-fitting approach over the table is that it enables finer-grained prediction (e.g., down to the month). So how did the new model fare in predicting production month? Across the whole data set, the average absolute error was just 3.71 months. However, the maximum error was a far less flattering 26.74 months (!!!) for a watch with a 4481xxxx serial number that was produced in April 1980. The updated model predicted July 1982 for this watch; Hartmann's table did even worse, estimating that the watch was produced some time in 1983. The next-worst error from the model was just under half of this. Broken down by period, the average absolute errors were 2.61, 7.28 and 4.59 months for 1957-69, 1970-79 and 1980-89 respectively. Broken down by reference, we get the following ...

Average absolute errors from the model were generally less than 6-7 months, which is an OK outcome.
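To compute month-level errors like these, the actual production date has to be put on the same scale as z. Below is a minimal sketch that assumes month number 1 = January 1957 (consistent with the year/month formulas further down) and that the error is measured as the absolute difference between the unrounded z and the actual month number. The latter is an assumption suggested by the fractional 26.74, not something stated above. The function names and the sample records are hypothetical.

```python
from collections import defaultdict


def month_number(year, month):
    """Month number on the same scale as z (assumed: 1 = January 1957)."""
    return (year - 1957) * 12 + month


def month_errors(records):
    """records: iterable of (group, predicted_z, actual_year, actual_month).

    group can be a period label ("1970-79") or a reference number.
    Returns (overall average absolute error, maximum error, {group: average absolute error}).
    """
    by_group = defaultdict(list)
    all_errors = []
    for group, z, year, month in records:
        err = abs(z - month_number(year, month))  # error in months
        by_group[group].append(err)
        all_errors.append(err)
    overall = sum(all_errors) / len(all_errors)
    per_group = {g: sum(e) / len(e) for g, e in by_group.items()}
    return overall, max(all_errors), per_group


# Hypothetical records; the last one mirrors the April 1980 case, with z chosen so the error is 26.74.
overall, worst, per_group = month_errors([
    ("1957-69", 100.5, 1965, 3),
    ("1957-69", 98.0, 1965, 3),
    ("1980-89", 306.74, 1980, 4),
])
```

Under this convention, April 1980 is month number 280, so a predicted z of about 306.74 corresponds to the 26.74-month miss described above.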

What can we conclude from all of this? Well, we shouldn't read too much into attempts to validate a model using the same data set that was used to derive it; we need new cases. Ultimately, the process might simply be too complex to be characterised by a single curve of this kind across all relevant time periods/references, and we may have to augment the model with other information. On the positive side, though, there's nothing in the updated data that invalidates the general approach, so there's probably still fruitful ground here.

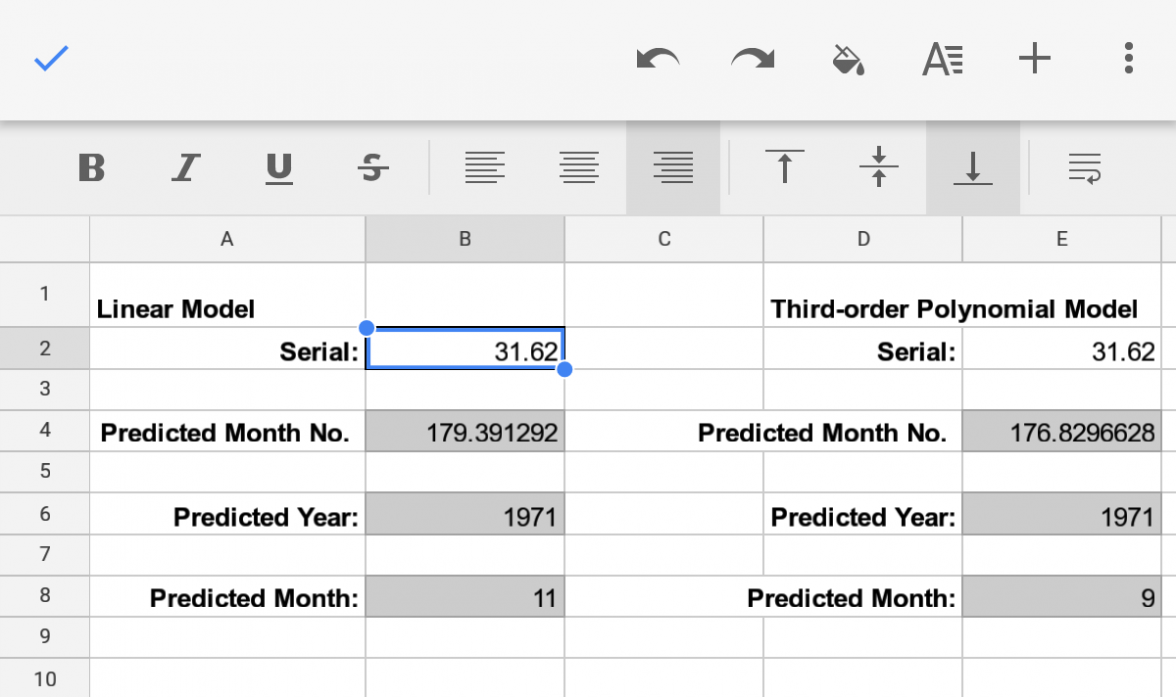

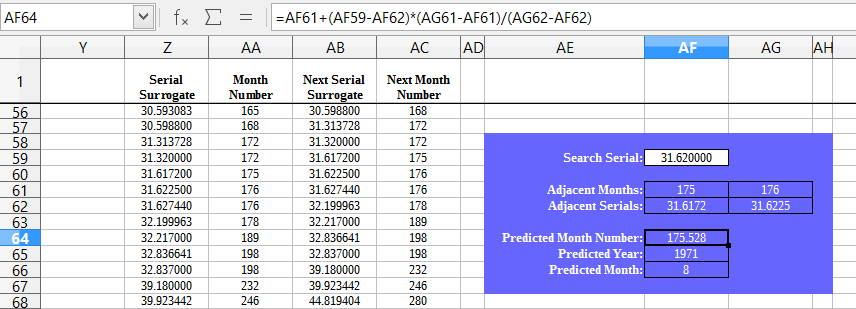

In the interests of error checking, and to enable folks to try this out for themselves, here are the updated calculations (where x is the serial number in the form xx.xxxxxx, i.e. the serial number divided by 1,000,000, and z is the predicted month number):

Predicted month number (z) = (0.0142174588*(x^3))-(1.3104154822*(x^2))+(47.0857686248*x)-451.3140730771

Predicted calendar year = 1957+ROUNDDOWN((ROUND(z-1))/12,0)

Predicted calendar month = MOD(ROUND(z-1),12)+1
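For anyone who prefers code to a spreadsheet, here is a hedged Python port of the three formulas above. The coefficients are copied from the post; the function names are hypothetical. Note that the spreadsheet ROUND rounds halves away from zero, while Python's built-in round() rounds halves to even, so a small helper mimics the spreadsheet behaviour, and ROUNDDOWN(..., 0) is mirrored with truncation toward zero.

```python
import math

# Coefficients from the posted third-order model (x = serial number / 1,000,000).
A, B, C, D = 0.0142174588, -1.3104154822, 47.0857686248, -451.3140730771


def excel_round(v):
    """Round half away from zero, like the spreadsheet ROUND(v, 0)."""
    return math.floor(abs(v) + 0.5) * (1 if v >= 0 else -1)


def predicted_month_number(serial):
    """z: predicted month number, where 1 = January 1957."""
    x = serial / 1_000_000  # e.g. 44810000 -> 44.81, the xx.xxxxxx form
    return A * x**3 + B * x**2 + C * x + D


def predicted_year_month(serial):
    """Translate z into (calendar year, calendar month) as in the posted formulas."""
    k = excel_round(predicted_month_number(serial) - 1)  # ROUND(z-1)
    year = 1957 + math.trunc(k / 12)                     # 1957 + ROUNDDOWN(.../12, 0)
    month = k % 12 + 1                                   # MOD(..., 12) + 1
    return year, month


# 44810000 is a hypothetical stand-in for the 4481xxxx watch discussed above.
print(predicted_year_month(44810000))
```

For this stand-in serial, z comes out at roughly 306.6, which maps to July 1982, matching the model's prediction for the 4481xxxx watch mentioned earlier.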

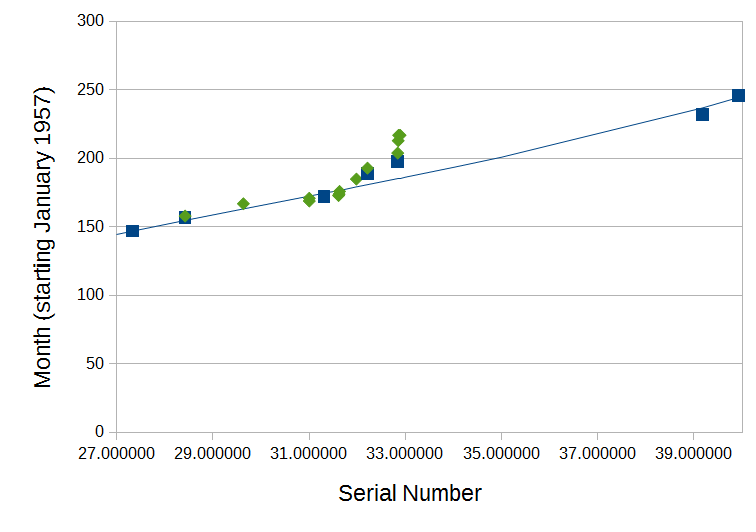

BREAKING NEWS: Yesterday, as I was preparing this post, I was sent a list of 18 new dates and partial serial numbers, mostly from watches made in the early 70s with serials in the low 30 millions. I tested both the new model and the table against these numbers, and both performed poorly. Indeed, sadly, for the first time the table slightly outperformed the model 😵‍💫

I'm corresponding with the member who sent me the data to see if we can figure out what's driving this poor performance.