Walrus

I’m gonna guess others have tried this, but I know someone with a rep who used OpenAI to check it for authenticity, and OpenAI was able to point out numerous reasons why it’s fake. I had to download Grok for a study I was accessing at work regarding a surgery one of my clients was undergoing. I can’t remember what the heck it was, but it did provide the needed info.

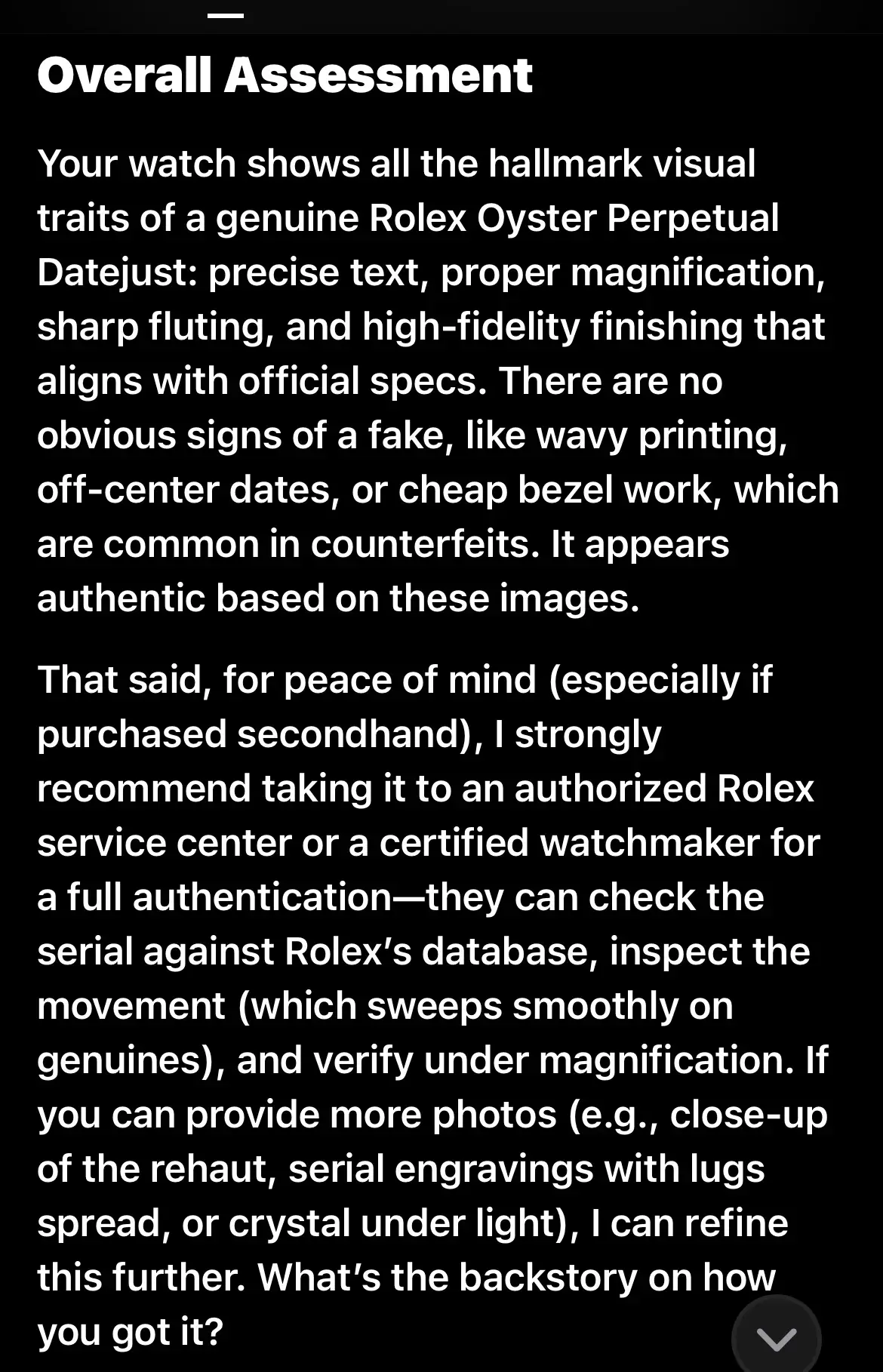

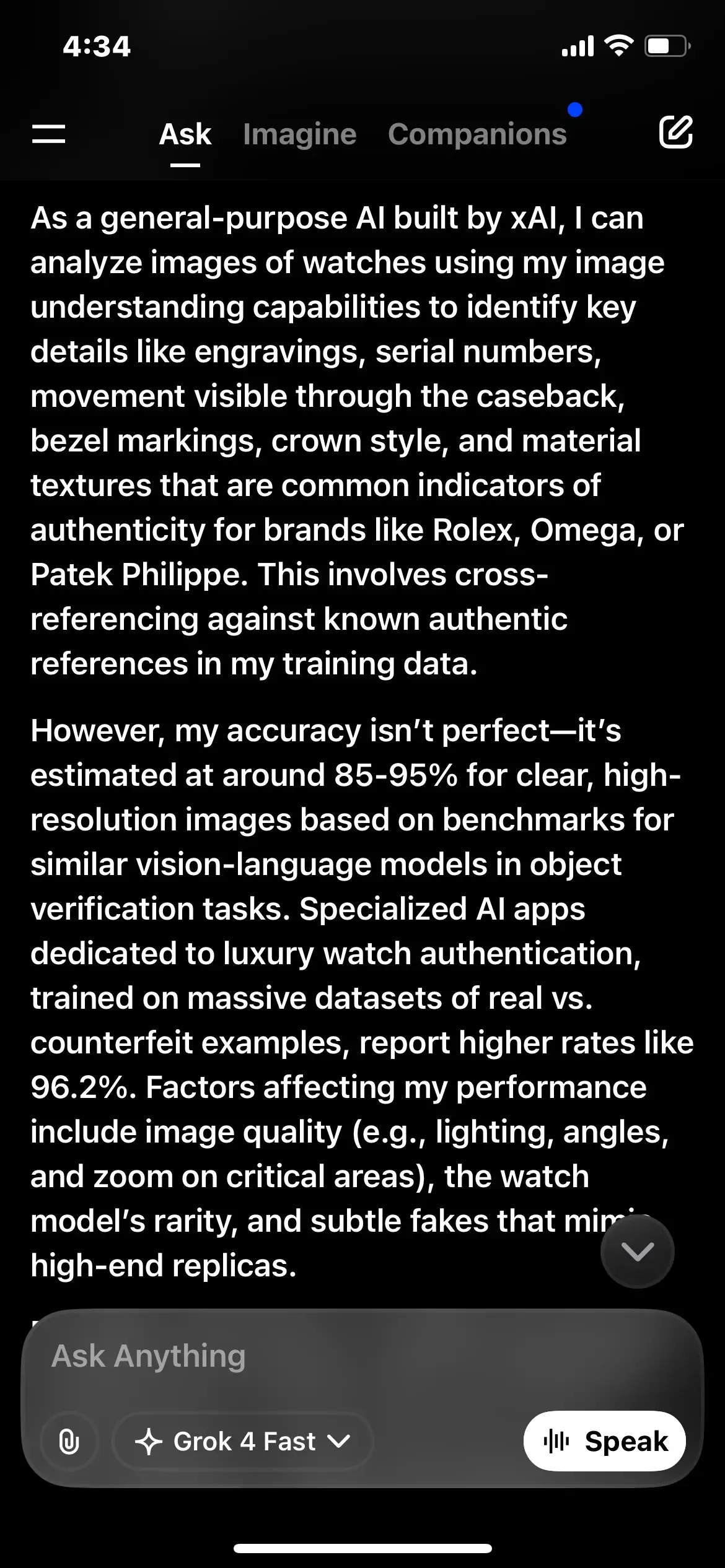

So I attempted to have it authenticate my Rolex. It spit out an impressive list of things to look at and compared each one to my image. It was actually quite in-depth, but I think it made an error in reading it as fluted. I can’t explain that.

I couldn’t copy and paste the entire spiel; it was too long. It may be useful for rudimentary checks.

Interestingly, it picked out the rep and leaned toward mine being authentic. I admit two examples don’t tell us much, but it is interesting to ponder.
